![Vector Database: A Big Data Analytics Solution for AI Agents [3/3 - anomaly]](https://res.cloudinary.com/dwid2xvok/image/upload/v1764212703/n8n/screenshots/vector-database-as-a-big-data-analysis-tool-for-ai-agents-33-anomaly.png)

Vector Database: A Big Data Analytics Solution for AI Agents [3/3 - anomaly]

Finalizes the implementation of a vector database for large-scale data analysis, with an emphasis on identifying anomalies for AI agents.

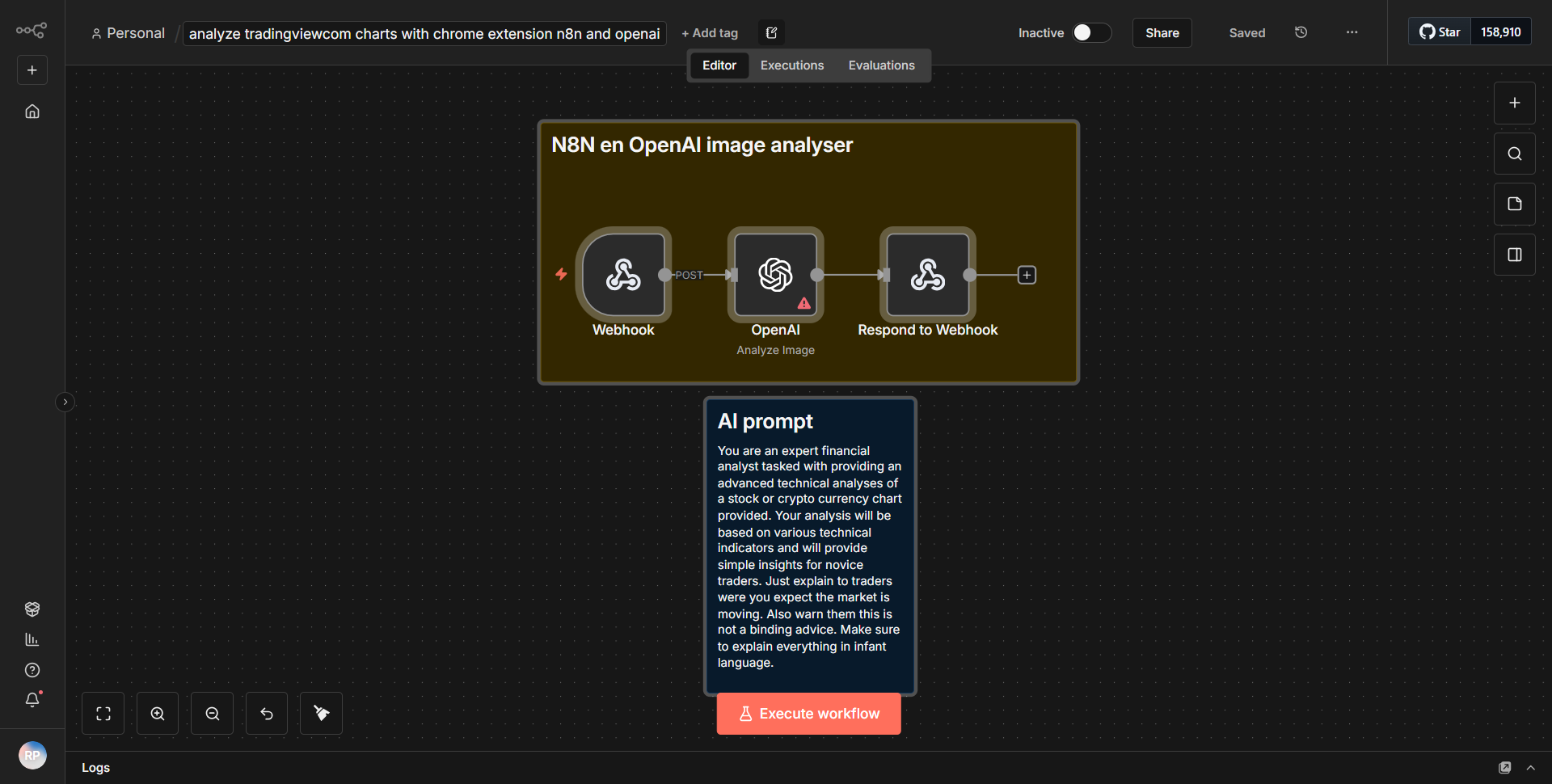

How it works

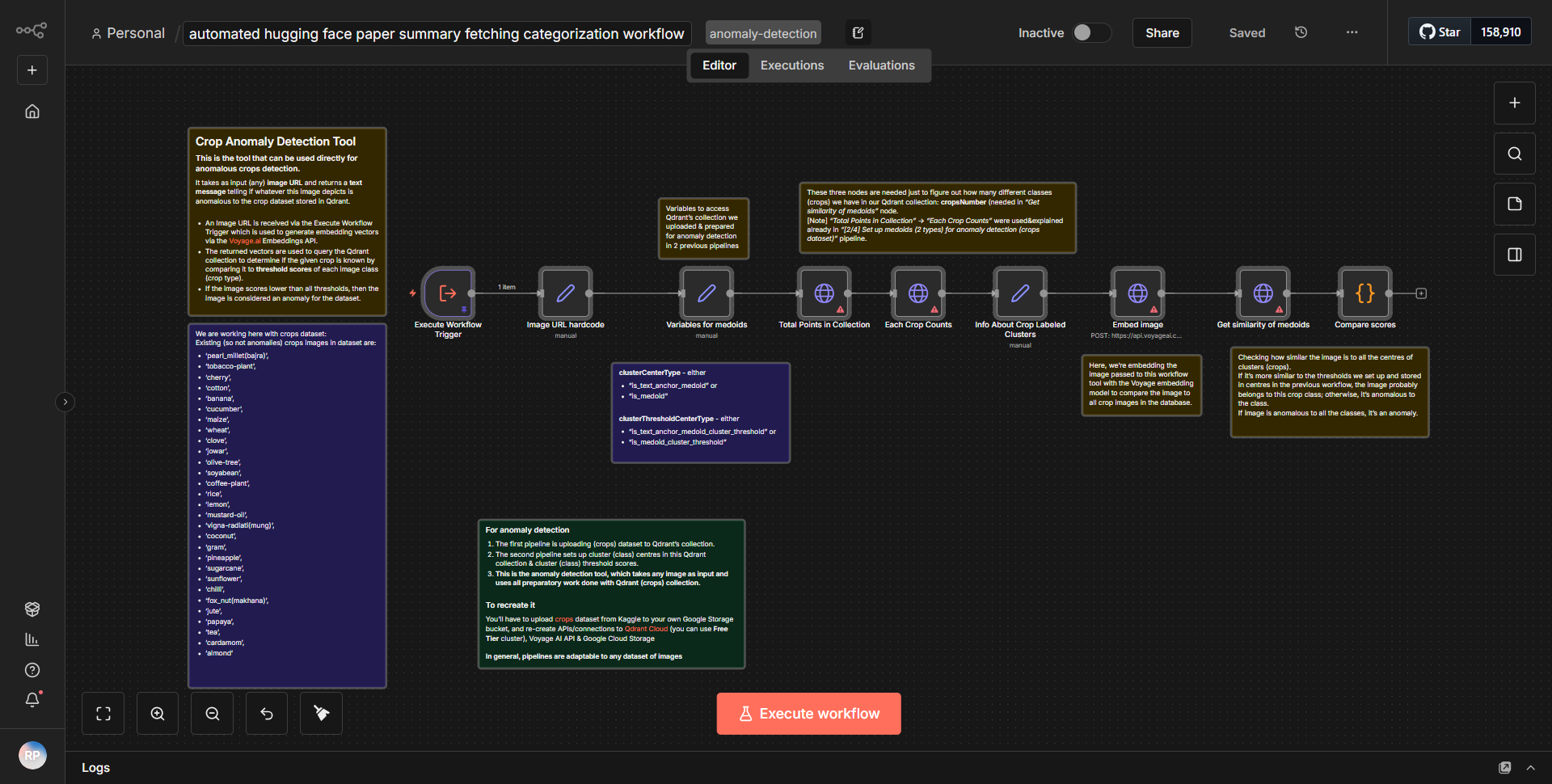

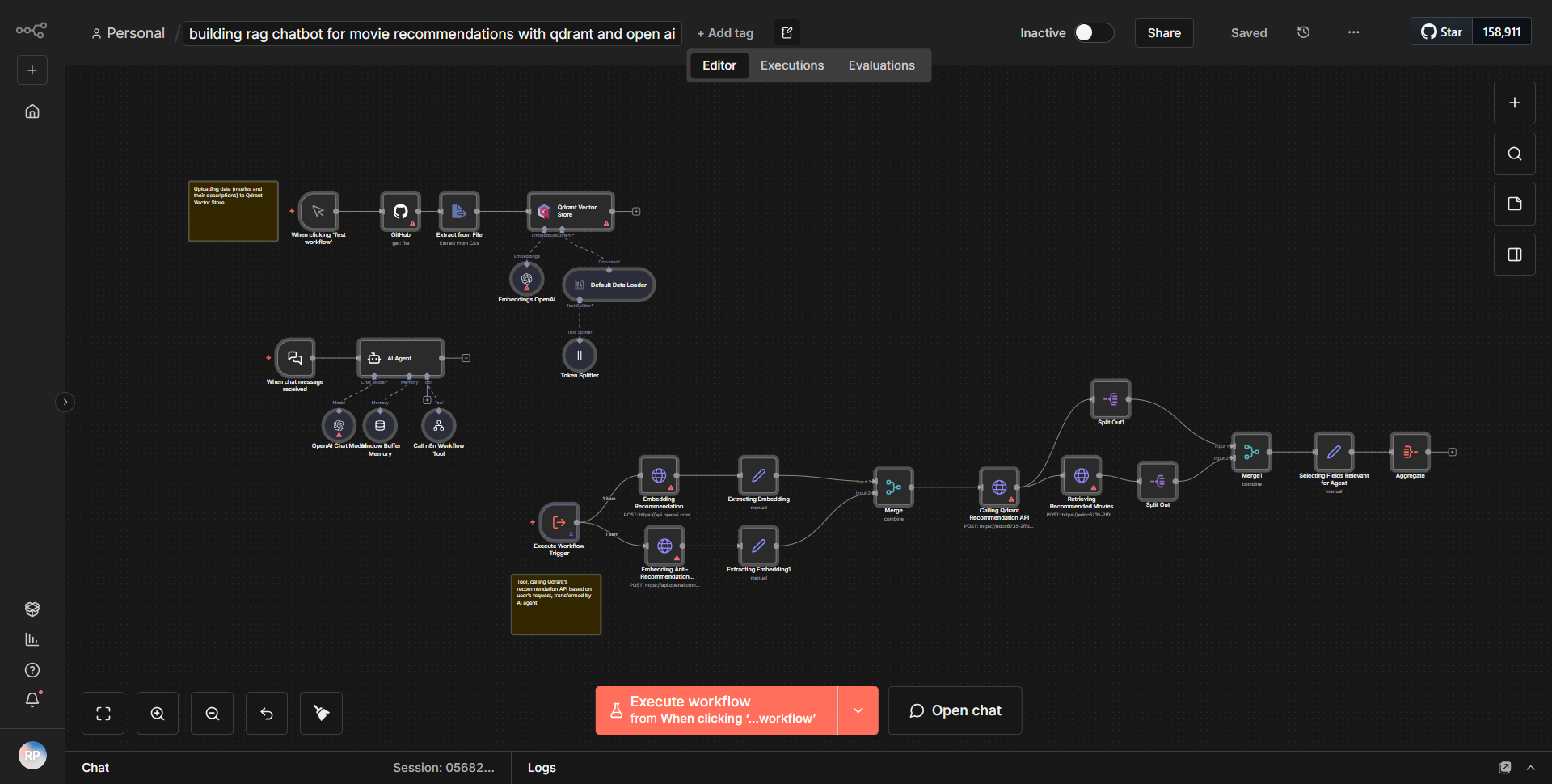

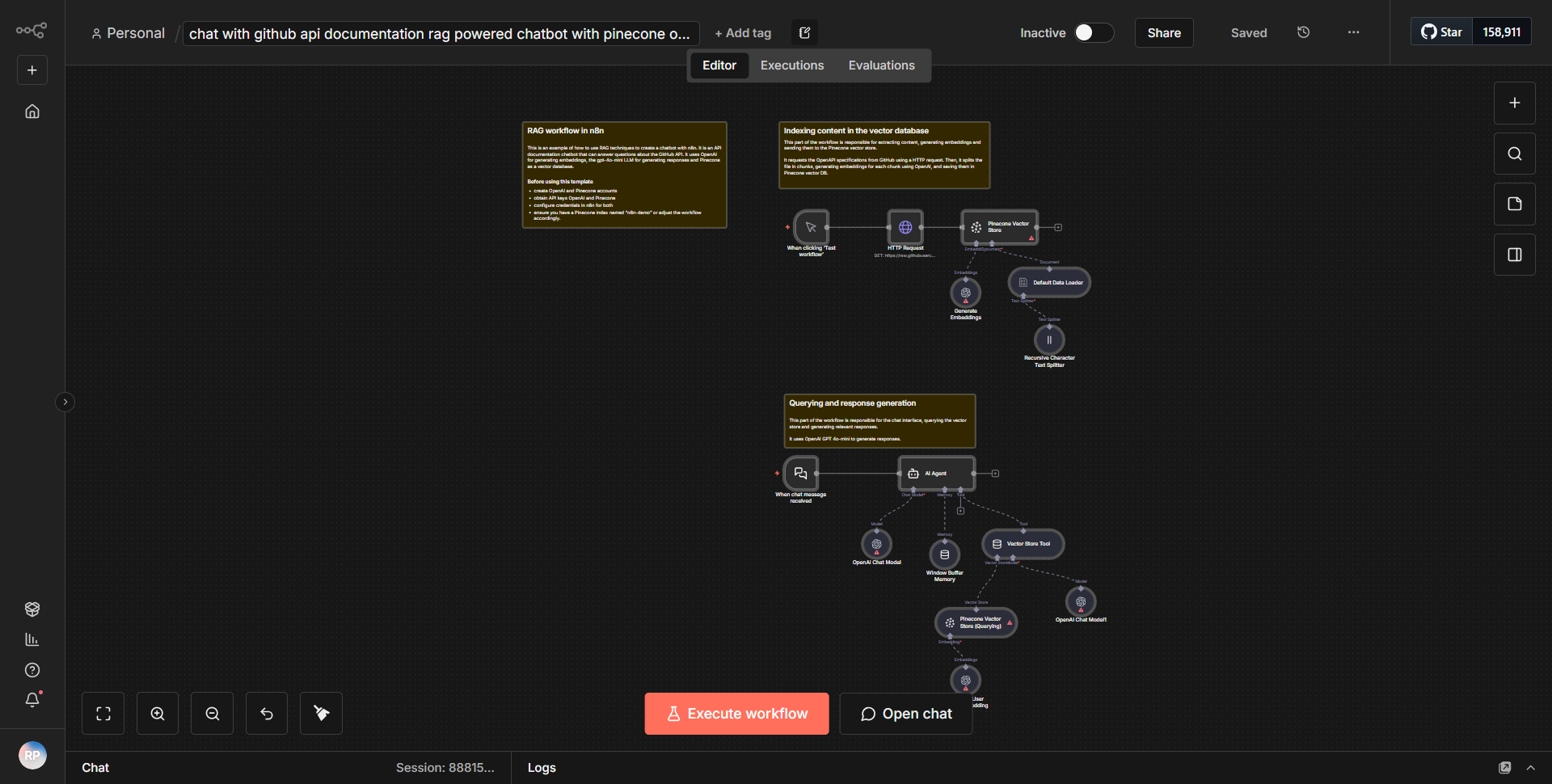

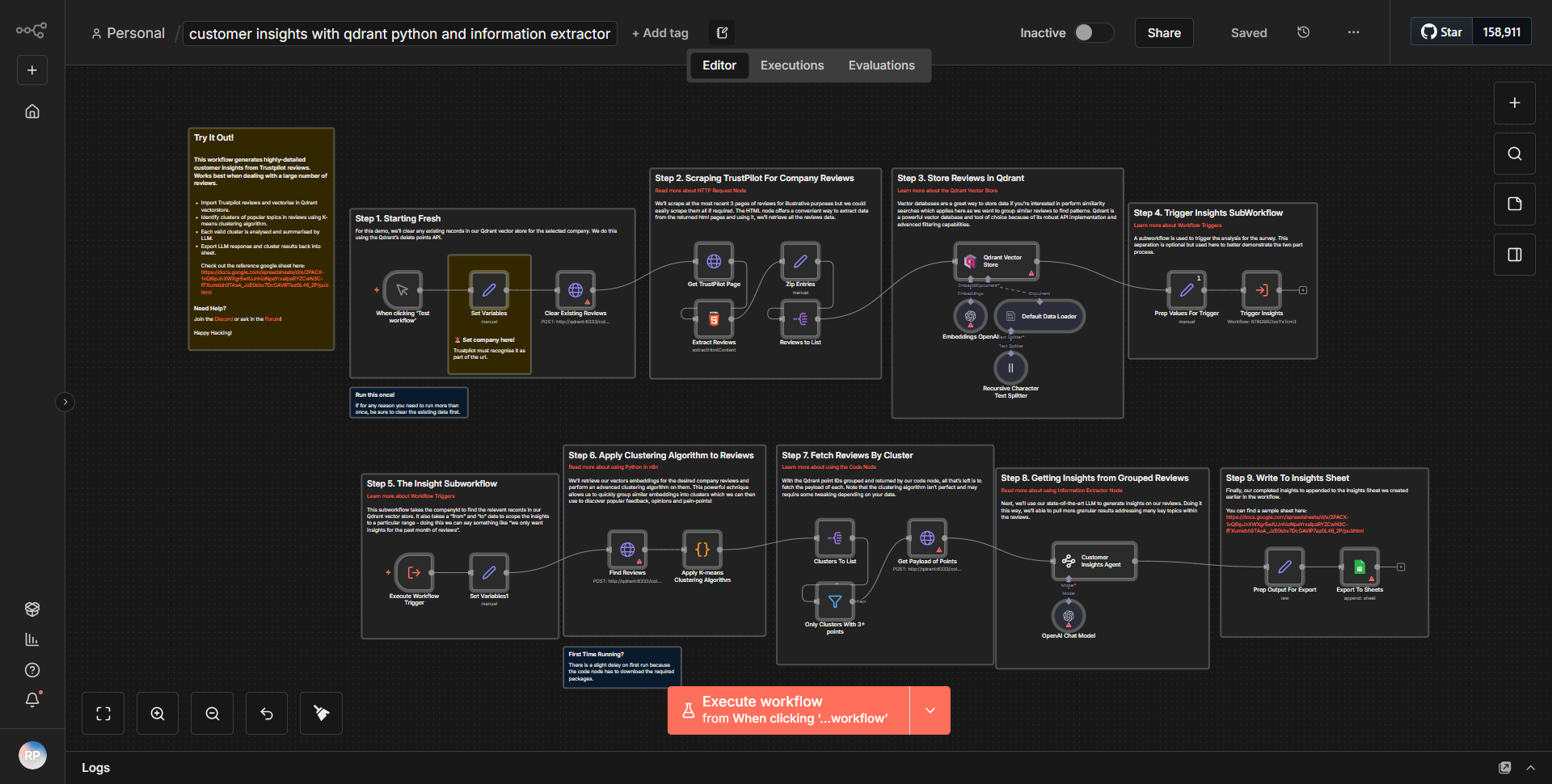

This workflow, the third of three in the series, completes the implementation of a vector database for large-scale data analysis, with a focus on anomaly detection for AI agents. Its nodes run in sequence, each one handling a single stage of the analysis:

1. Data Input:

The workflow begins with a node that retrieves data from a specified source, which could be a database or an API. This node is responsible for fetching the necessary datasets that will be analyzed for anomalies.
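As a rough illustration of this retrieval step outside n8n, the sketch below assumes the source is an HTTP API that returns a JSON array of records. The workflow description does not name the endpoint, so `fetch_records`, the `read` parameter, and the URL in the usage note are all hypothetical.

```python
import json
from typing import Callable, Optional
from urllib.request import urlopen


def fetch_records(url: str, read: Optional[Callable[[str], bytes]] = None) -> list:
    """Fetch a JSON array of records from `url`.

    `read` is an injectable function (URL -> raw bytes) so the step can be
    exercised without network access; by default it performs an HTTP GET.
    """
    if read is None:
        def read(u: str) -> bytes:
            with urlopen(u, timeout=30) as resp:
                return resp.read()

    payload = json.loads(read(url).decode("utf-8"))
    # Assumption: the API returns a top-level JSON array, one object per record.
    if not isinstance(payload, list):
        raise ValueError("expected a JSON array of records")
    return payload
```

A call such as `fetch_records("https://example.invalid/records")` would return a list of dictionaries ready for the processing step; passing a custom `read` function makes it possible to substitute a file or a test fixture for the network call.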

2. Data Processing:

Once the data is retrieved, it is passed to a processing node that prepares the data for analysis. This may involve cleaning the data, transforming it into the required format, and possibly normalizing it to ensure consistency across the dataset.
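The cleaning and normalization described here could look roughly like the following sketch. It assumes numeric fields and uses min-max scaling, which is one common choice; the workflow description does not say which normalization is applied, and `clean_and_normalize` is a hypothetical helper name.

```python
import math


def clean_and_normalize(rows: list, fields: list) -> list:
    """Drop rows with missing or non-finite values, then min-max scale each field to [0, 1]."""
    cleaned = []
    for row in rows:
        try:
            values = {f: float(row[f]) for f in fields}
        except (KeyError, TypeError, ValueError):
            continue  # missing field or non-numeric value: skip the row
        if all(math.isfinite(v) for v in values.values()):
            cleaned.append(values)

    for f in fields:
        if not cleaned:
            break
        lo = min(r[f] for r in cleaned)
        hi = max(r[f] for r in cleaned)
        span = hi - lo
        for r in cleaned:
            # A constant column carries no information; map it to 0.0.
            r[f] = (r[f] - lo) / span if span else 0.0
    return cleaned
```

Scaling every field to the same range keeps a field with large raw values from dominating distance calculations in the later steps.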

3. Vectorization:

Next, the processed data is vectorized. Each record is converted into a numeric vector so that records can be compared by distance or similarity, which is what makes pattern and anomaly analysis possible. The description does not specify which algorithm the vectorization node uses.
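Since the algorithm is not named, the sketch below uses feature hashing purely as a stand-in to show what "turning a record into a vector" means. Real deployments commonly use a learned embedding model instead. `hash_vectorize` and `dim` are hypothetical names, and SHA-256 is used only as a stable hash, not for security.

```python
import hashlib
import math
import re


def hash_vectorize(text: str, dim: int = 64) -> list:
    """Map text to a fixed-length, L2-normalized vector via feature hashing."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "big") % dim
        # Signed hashing: colliding tokens partly cancel instead of always adding up.
        sign = 1.0 if digest[4] % 2 == 0 else -1.0
        vec[index] += sign
    norm = math.sqrt(sum(v * v for v in vec))
    # Text without tokens yields the zero vector, which cannot be normalized.
    return [v / norm for v in vec] if norm else vec
```

Because the hash is deterministic, the same text always maps to the same vector, and every vector has the same length regardless of how long the input is.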

4. Anomaly Detection:

After vectorization, the workflow employs an anomaly detection algorithm. This node analyzes the vectors to identify any outliers or anomalies within the dataset. The results of this analysis are critical for understanding unusual patterns that may indicate issues or opportunities.
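One simple way to flag outliers among vectors is to measure each vector's distance from the centroid and mark those whose distance is unusually large. The sketch below does this with a z-score cutoff. It is an assumption for illustration only: the workflow description does not say which detection algorithm is used, and `find_anomalies` and `z_threshold` are hypothetical names.

```python
import math
from statistics import mean, pstdev


def find_anomalies(vectors: list, z_threshold: float = 2.0) -> list:
    """Return indices of vectors whose distance to the centroid is unusually large."""
    if len(vectors) < 2:
        return []
    dim = len(vectors[0])
    centroid = [mean(v[i] for v in vectors) for i in range(dim)]
    distances = [math.dist(v, centroid) for v in vectors]
    mu, sigma = mean(distances), pstdev(distances)
    if sigma == 0:
        return []  # all vectors are equally far from the centroid
    # Flag a vector when its distance lies more than z_threshold
    # standard deviations above the mean distance.
    return [i for i, d in enumerate(distances) if (d - mu) / sigma > z_threshold]
```

A centroid-based score suits data that forms a single cluster. For data with several clusters, a nearest-neighbor distance computed by the vector database would likely be a better fit.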

5. Output Generation:

Finally, the workflow concludes with a node that generates output based on the results of the anomaly detection. This output can be in various formats, such as reports, alerts, or data visualizations, depending on the requirements of the AI agents that will utilize this information.
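As an example of one possible output format, the sketch below serializes the flagged records into a JSON alert payload. The field names and the `build_alert` helper are hypothetical; the workflow description leaves the output format open.

```python
import json
from datetime import datetime, timezone


def build_alert(records: list, anomaly_indices: list) -> str:
    """Serialize the flagged records into a JSON alert payload."""
    flagged = [records[i] for i in anomaly_indices]
    report = {
        "generated_at": datetime.now(timezone.utc).isoformat(),
        "total_records": len(records),
        "anomaly_count": len(flagged),
        "anomalies": flagged,
    }
    return json.dumps(report, indent=2)
```

A payload like this can be posted to a webhook, written to a report, or handed back to an AI agent as structured context.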

Each node passes its output to the next, so every step operates on the result of the previous one and the final output reflects the full analysis of the dataset.

Key Features

- Anomaly Detection:

The workflow's main purpose is to detect anomalies in large datasets, so that AI agents can identify unusual patterns or behaviors.

- Vectorization:

The workflow converts data into vectors, a compact representation that lets records be compared by similarity.

- Scalability:

The workflow is designed for large-scale data analysis and targets big data applications with extensive datasets.

- Automation:

Every step from data retrieval to anomaly detection runs automatically, which reduces manual intervention.

- Customizable Outputs:

The final output can be tailored to meet specific needs, whether for reporting, alerts, or further data processing.

Tools Integration

The workflow integrates several tools and services through specific n8n nodes:

- HTTP Request Node:

Used to fetch data from external APIs or databases.

- Function Node:

Handles data processing and transformation tasks. In recent n8n versions this role is filled by the Code node.

- Vectorization Node:

Implements algorithms for converting data into vector format.

- Anomaly Detection Node:

Analyzes the vectors to identify anomalies.

- Output Node:

Generates the final results in the desired format.

API Keys Required

The workflow description does not list any API keys or authentication credentials. Access to the data sources is assumed to be already configured in the environment where the workflow is deployed.
