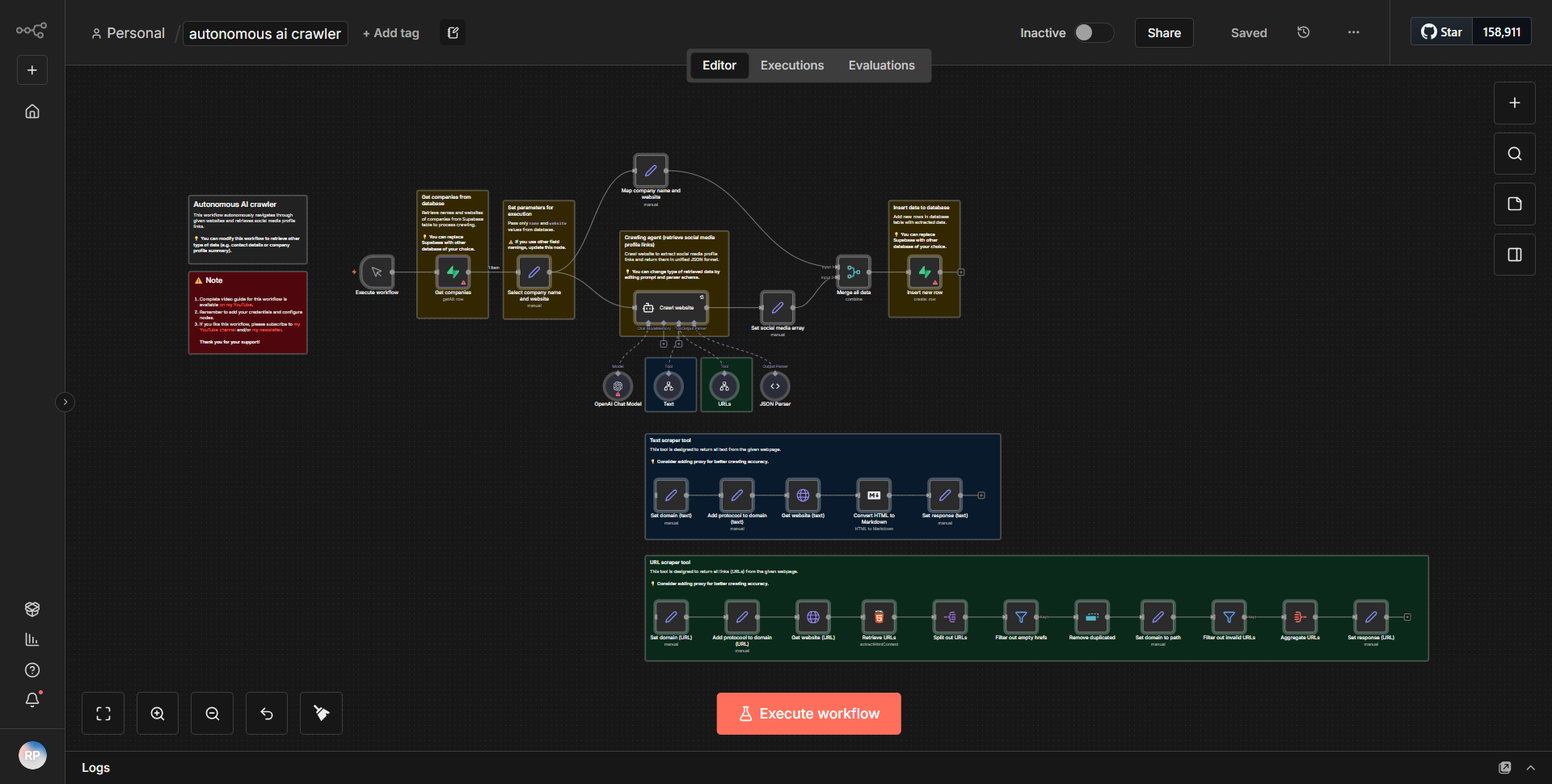

Self-sufficient AI web crawler

A self-sufficient AI-driven web scraper for gathering and analyzing data.

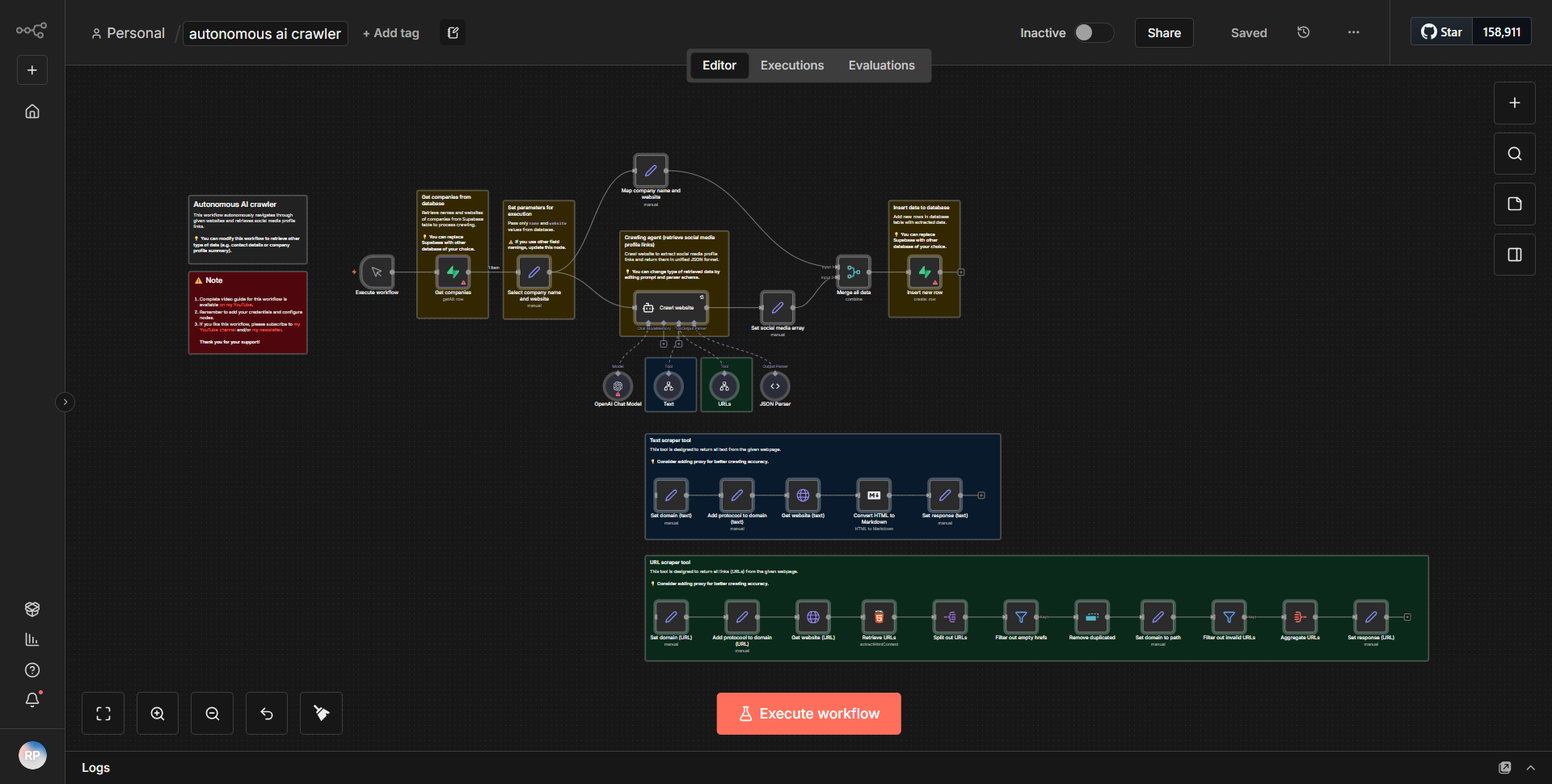

How it works

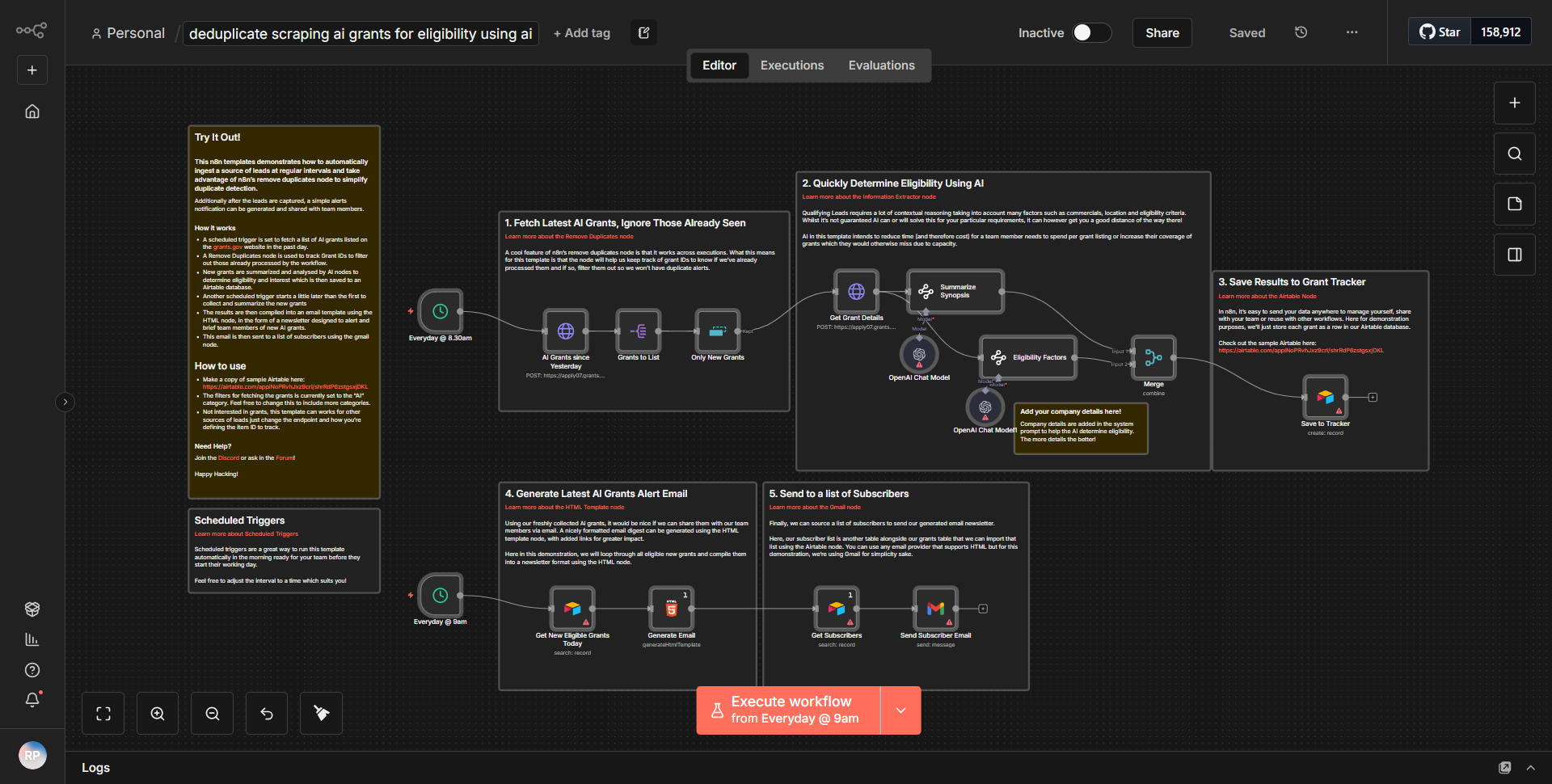

The "Self-sufficient AI web crawler" workflow operates as an autonomous web scraper designed to gather and analyze data from the internet. The workflow begins with a trigger node that initiates the scraping process based on a defined schedule or event. Once triggered, the workflow follows a systematic flow of data through various nodes.

1. Start Node:

The workflow is initiated either on a schedule or via a webhook, depending on the configuration.

2. HTTP Request Node:

This node is responsible for sending a request to the target website. It retrieves the HTML content of the specified URL.

3. HTML Extract Node:

After obtaining the HTML content, this node parses the data to extract relevant information such as titles, links, or specific text elements based on predefined selectors.

4. Function Node:

This node processes the extracted data further, applying any necessary transformations or calculations. It may also include logic to filter or format the data for better usability.

5. Data Storage Node:

The processed data is then stored in a database or a cloud service for future reference and analysis. This could involve nodes like Google Sheets, Airtable, or a custom database integration.

6. Notification Node:

Finally, the workflow may include a notification system that alerts the user about the completion of the scraping task or any significant findings. This could be through email, Slack, or another messaging service.

The nodes are interconnected in a linear fashion, ensuring that data flows seamlessly from one step to the next, allowing for efficient data collection and processing.

Key Features

- Autonomous Operation:

The workflow is designed to run without manual intervention, making it suitable for continuous data gathering.

- Data Extraction:

Capable of extracting specific data points from web pages using customizable selectors, allowing users to tailor the scraping process to their needs.

- Data Processing:

Includes functionality for processing and transforming the extracted data, ensuring that it is in a usable format for analysis.

- Storage Integration:

Supports various storage solutions, enabling users to save their data in preferred formats and locations for easy access and analysis.

- Notification System:

Provides alerts and notifications upon completion of tasks or when specific conditions are met, keeping users informed of the workflow's status.

Tools Integration

The workflow integrates with several tools and services to enhance its functionality:

- HTTP Request Node:

Used to fetch data from target websites.

- HTML Extract Node:

Parses HTML content to extract relevant data.

- Function Node:

Performs custom data processing and transformations.

- Database Nodes:

Integrates with services like Google Sheets or Airtable for data storage.

- Notification Nodes:

Sends alerts via email or messaging platforms like Slack.

API Keys Required

No API keys or authentication credentials are required for the basic functionality of this workflow. However, if the workflow integrates with specific services (like Google Sheets or Airtable), users will need to provide the necessary API keys or authentication tokens for those services to enable data storage and retrieval.