Extract and summarize articles from a news website without an RSS feed using AI, and store the results in NocoDB.

This workflow scrapes articles from news websites that have no RSS feed, summarizes them with AI, and stores the results in NocoDB.

How it works

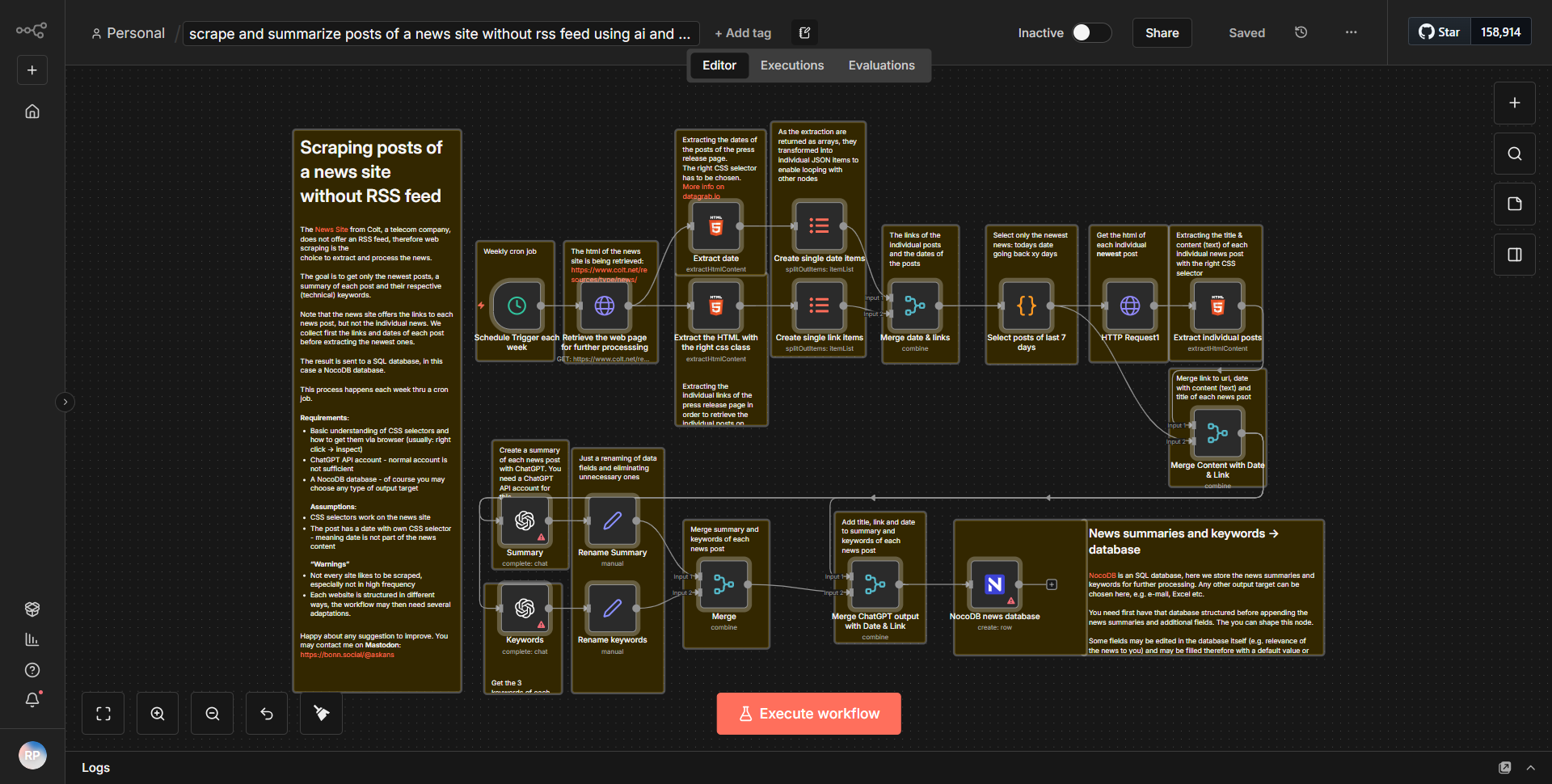

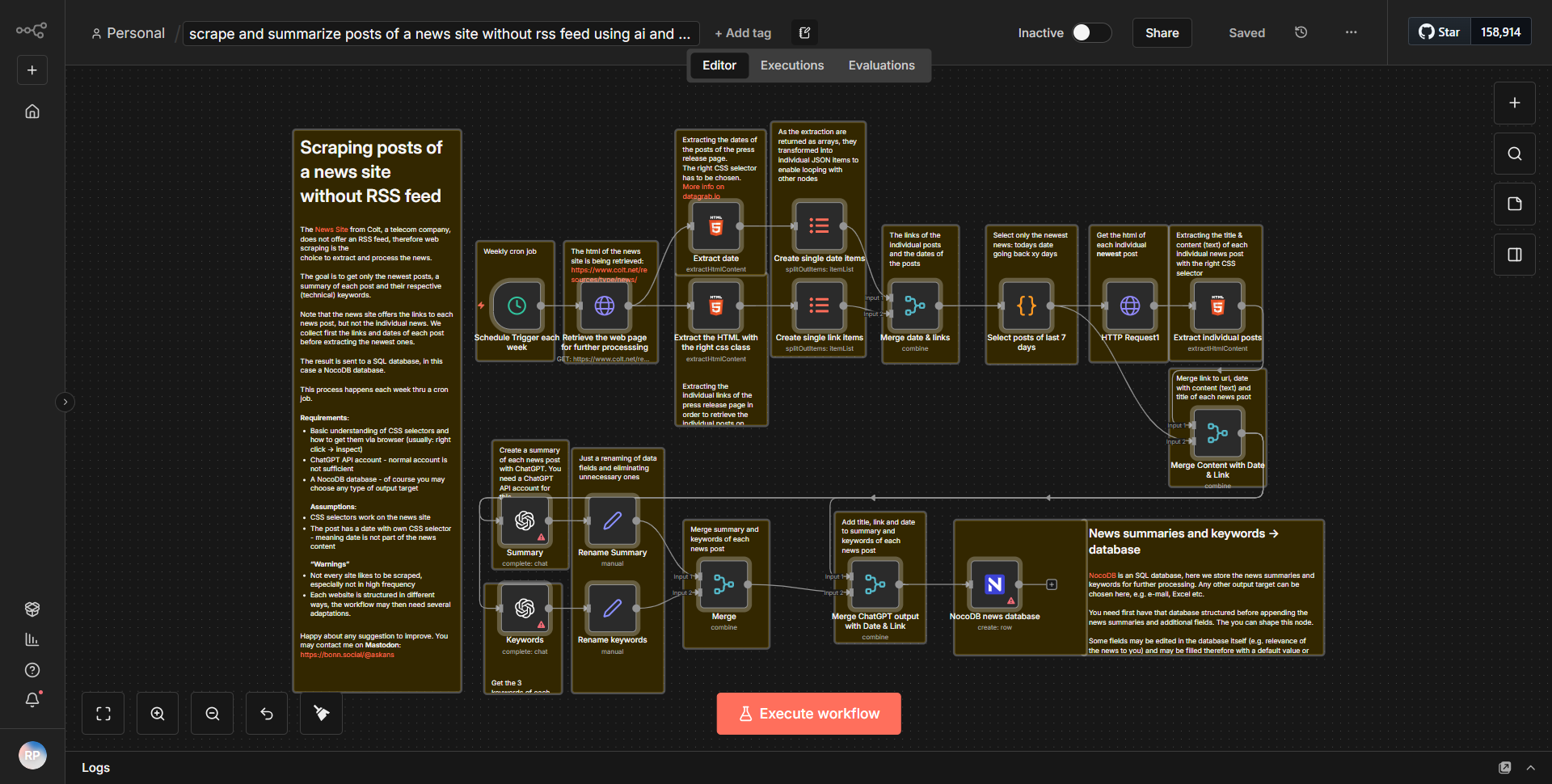

The workflow begins with an HTTP Request node configured to fetch the HTML of a news website that does not provide an RSS feed. The node performs a GET request to the target URL and retrieves the entire page. Its output, the raw HTML, is passed to the next node for processing.

Following the HTTP Request node is an HTML Extract node, which parses the HTML retrieved in the previous step. It uses CSS selectors to identify and extract specific elements, such as article titles, publication dates, and the main body of each article. The extracted data is returned in a structured format, typically JSON.

Next, a Function node processes the extracted data further. In this node, the articles are condensed using AI techniques; depending on the specific implementation, this could mean summarizing the content or extracting key points. The output is a summarized version of each article, ready for storage.

The final step is a NocoDB node, which stores the summarized articles in a NocoDB database. The node is configured to create new records in a specified table, one record per summarized article. The data sent to NocoDB includes the title, the summary, and any other relevant metadata extracted earlier.

Throughout the workflow, data flows sequentially from one node to the next, transforming raw HTML into structured, summarized content stored in a database for easy access and management.
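The sequential data flow described above can be sketched outside the workflow editor as four pluggable stages. This is only an illustration under stated assumptions: the `Article` type, `run_pipeline`, and `first_sentence` are hypothetical names, and `first_sentence` is a trivial placeholder for the AI step, not the summarization the workflow actually performs.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Article:
    # Hypothetical record shape; the real workflow may carry more metadata.
    title: str
    body: str
    summary: str = ""


def first_sentence(text: str) -> str:
    """Placeholder for the AI step: keep only the first sentence."""
    if not text.strip():
        return ""
    return text.split(". ")[0].rstrip(".") + "."


def run_pipeline(
    url: str,
    fetch: Callable[[str], str],                  # HTTP Request stage
    extract: Callable[[str], Iterable[Article]],  # HTML Extract stage
    summarize: Callable[[str], str],              # summarization stage
    store: Callable[[Article], None],             # NocoDB stage
) -> List[Article]:
    """Pass data through the four stages in order; return what was stored."""
    html = fetch(url)
    stored: List[Article] = []
    for article in extract(html):
        article.summary = summarize(article.body)
        store(article)
        stored.append(article)
    return stored
```

Because each stage is passed in as a function, the fetch and store steps can be replaced with stubs for testing, which mirrors how each node in the workflow can be swapped or reconfigured independently.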

Key Features

1. AI-Powered Summarization:

The workflow utilizes AI techniques to condense lengthy articles into concise summaries, making it easier for users to grasp the essential information quickly.

2. HTML Content Extraction:

By scraping HTML content directly from websites that lack RSS feeds, the workflow can gather news articles from sources that would otherwise be difficult to collect from automatically.

3. Integration with NocoDB:

The ability to store summarized articles in NocoDB allows for organized data management and easy retrieval, facilitating further analysis or reporting.

4. Customizable Data Extraction:

The use of CSS selectors in the HTML Extract node enables users to customize which elements of the articles they wish to extract, providing flexibility based on different website structures.

5. Automated Workflow:

The entire process is automated, reducing the need for manual data collection and summarization, thus saving time and effort for users.
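The customizable extraction in feature 4 can be illustrated with a small configuration-driven parser. This is a minimal sketch using only Python's standard library, not the HTML Extract node itself: it supports only simple `tag.class` matches rather than full CSS selector syntax, and the field names, tags, and class names in `SITE_FIELDS` are hypothetical values that would need to be adapted to the target site.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple

# Hypothetical per-site configuration: field name -> (tag, CSS class).
# Equivalent in spirit to selectors such as "h2.headline".
SITE_FIELDS: Dict[str, Tuple[str, str]] = {
    "title": ("h2", "headline"),
    "date": ("time", "published"),
    "body": ("div", "article-body"),
}


class FieldExtractor(HTMLParser):
    """Collect the text of every element matching a (tag, class) pair."""

    def __init__(self, fields: Dict[str, Tuple[str, str]]):
        super().__init__()
        self.fields = fields
        self.results: Dict[str, List[str]] = {name: [] for name in fields}
        self._active: Optional[str] = None  # field currently being captured
        self._depth = 0                     # open tags of the matched tag name
        self._buffer: List[str] = []

    def handle_starttag(self, tag, attrs):
        if self._active is not None:
            # Track nested tags with the same name so we close at the right one.
            if tag == self.fields[self._active][0]:
                self._depth += 1
            return
        classes = (dict(attrs).get("class") or "").split()
        for name, (want_tag, want_class) in self.fields.items():
            if tag == want_tag and want_class in classes:
                self._active, self._depth, self._buffer = name, 1, []
                break

    def handle_endtag(self, tag):
        if self._active is None or tag != self.fields[self._active][0]:
            return
        self._depth -= 1
        if self._depth == 0:
            # Normalize whitespace before storing the captured text.
            text = " ".join("".join(self._buffer).split())
            self.results[self._active].append(text)
            self._active = None

    def handle_data(self, data):
        if self._active is not None:
            self._buffer.append(data)


def extract_fields(html: str, fields: Dict[str, Tuple[str, str]] = SITE_FIELDS):
    parser = FieldExtractor(fields)
    parser.feed(html)
    parser.close()
    return parser.results
```

Adapting the sketch to a different site means editing only the `SITE_FIELDS` mapping, which is the same kind of flexibility the CSS selectors give in the HTML Extract node.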

Tools Integration

- HTTP Request Node:

Used to fetch HTML content from the specified news website.

- HTML Extract Node:

Parses the HTML and extracts relevant article data using CSS selectors.

- Function Node:

Processes the extracted data and utilizes AI for summarization.

- NocoDB Node:

Stores the summarized articles in a NocoDB database for structured data management.
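For readers who want to see what the storage step amounts to outside the NocoDB node, the sketch below builds (but does not send) a request that would create one record. It rests on several assumptions that are not stated in this description: NocoDB's v2 REST path layout (`/api/v2/tables/{tableId}/records`), token authentication through an `xc-token` header, and hypothetical column names `Title` and `Summary`. Check these against your own NocoDB version and table schema.

```python
import json
import urllib.request


def build_nocodb_request(base_url: str, table_id: str, api_token: str, record: dict):
    """Build a POST request that would create one record in a NocoDB table.

    Assumes the v2 REST path and the `xc-token` header; both should be
    verified against the NocoDB instance in use.
    """
    url = f"{base_url.rstrip('/')}/api/v2/tables/{table_id}/records"
    return urllib.request.Request(
        url,
        data=json.dumps(record).encode("utf-8"),
        headers={"xc-token": api_token, "Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical values for illustration only; nothing is sent over the network.
request = build_nocodb_request(
    "https://nocodb.example.com/",
    "tbl_placeholder",
    "token_placeholder",
    {"Title": "Example headline", "Summary": "One-sentence summary."},
)
```

Passing the returned object to `urllib.request.urlopen` would send it; inside the workflow, the NocoDB node handles this step once its credentials and target table are configured.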

API Keys Required

No API keys are listed as required for this workflow. The HTTP request to the news website is unauthenticated. Note, however, that the NocoDB node typically needs NocoDB credentials (such as an API token) to write records, and any external AI summarization service the workflow calls would need its own key.