Web scraping AI agent

How it works

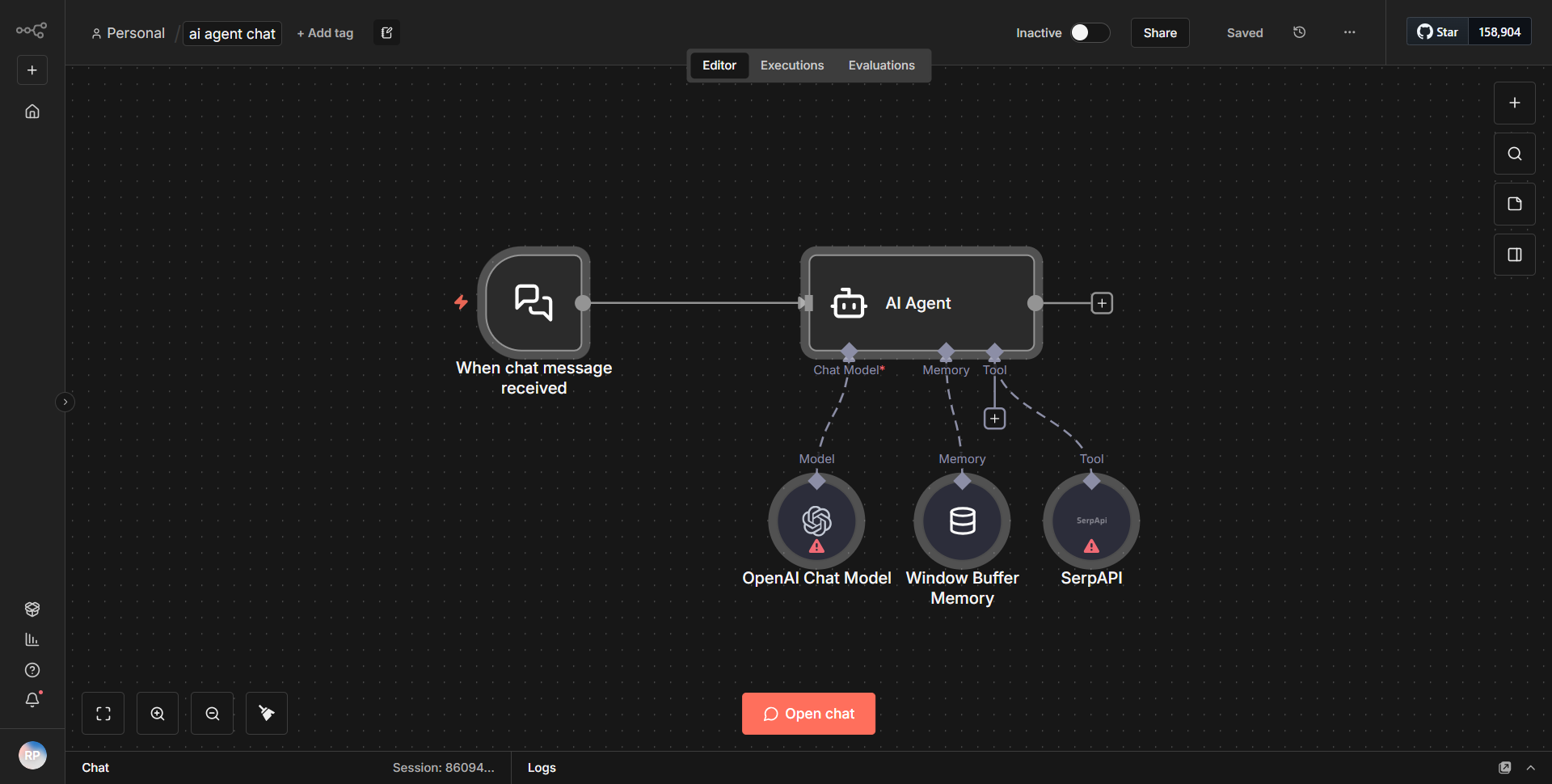

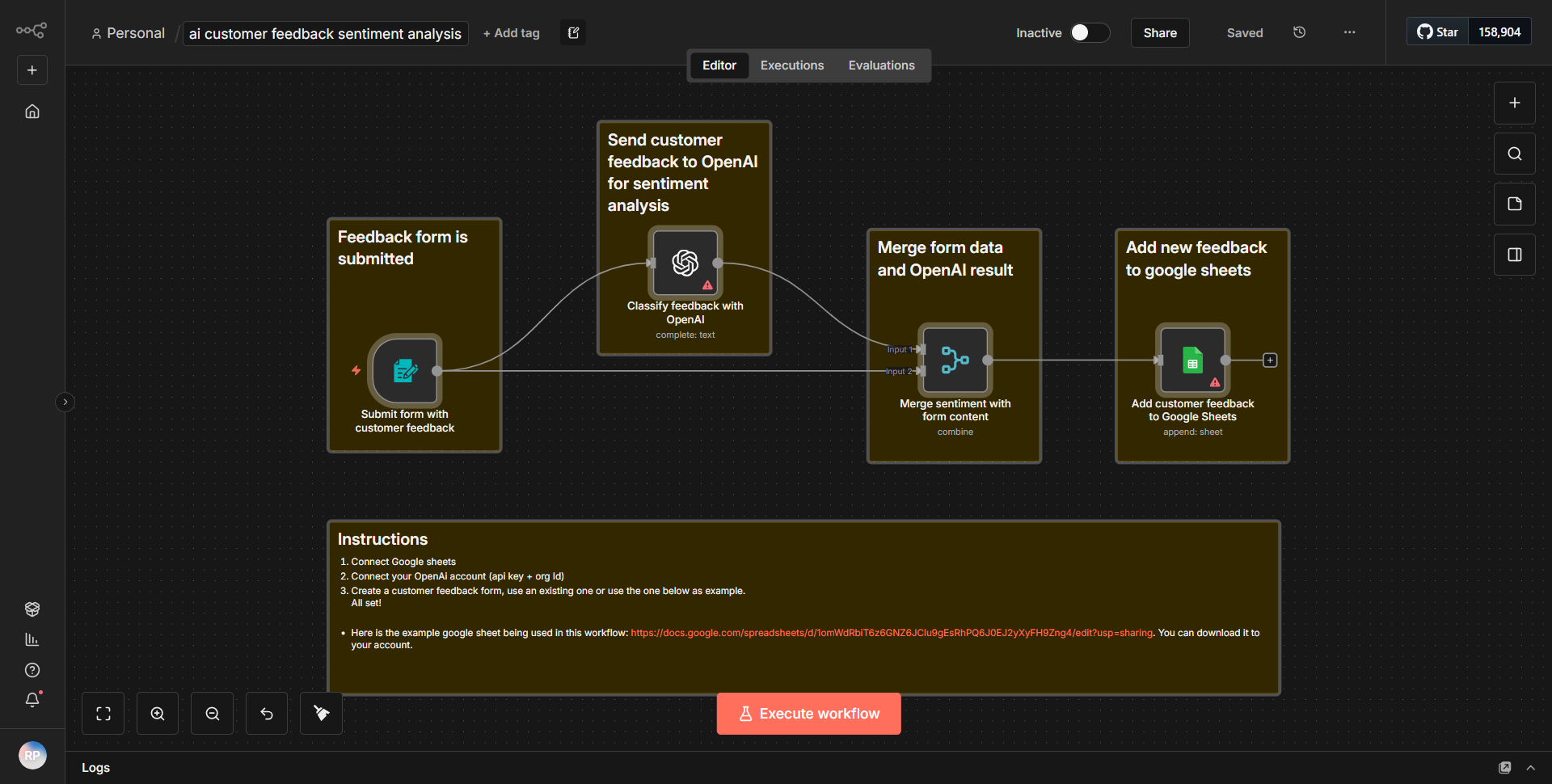

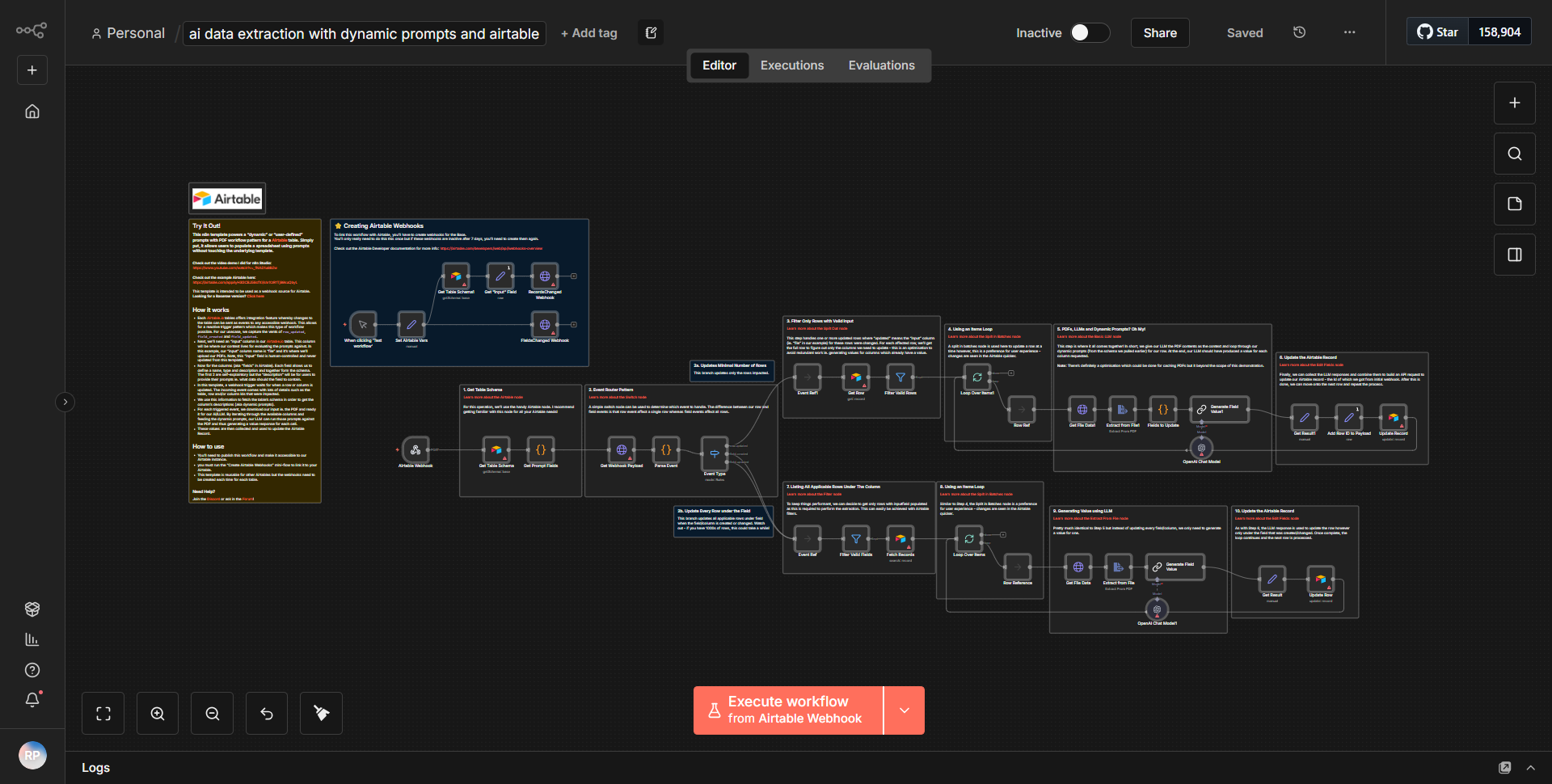

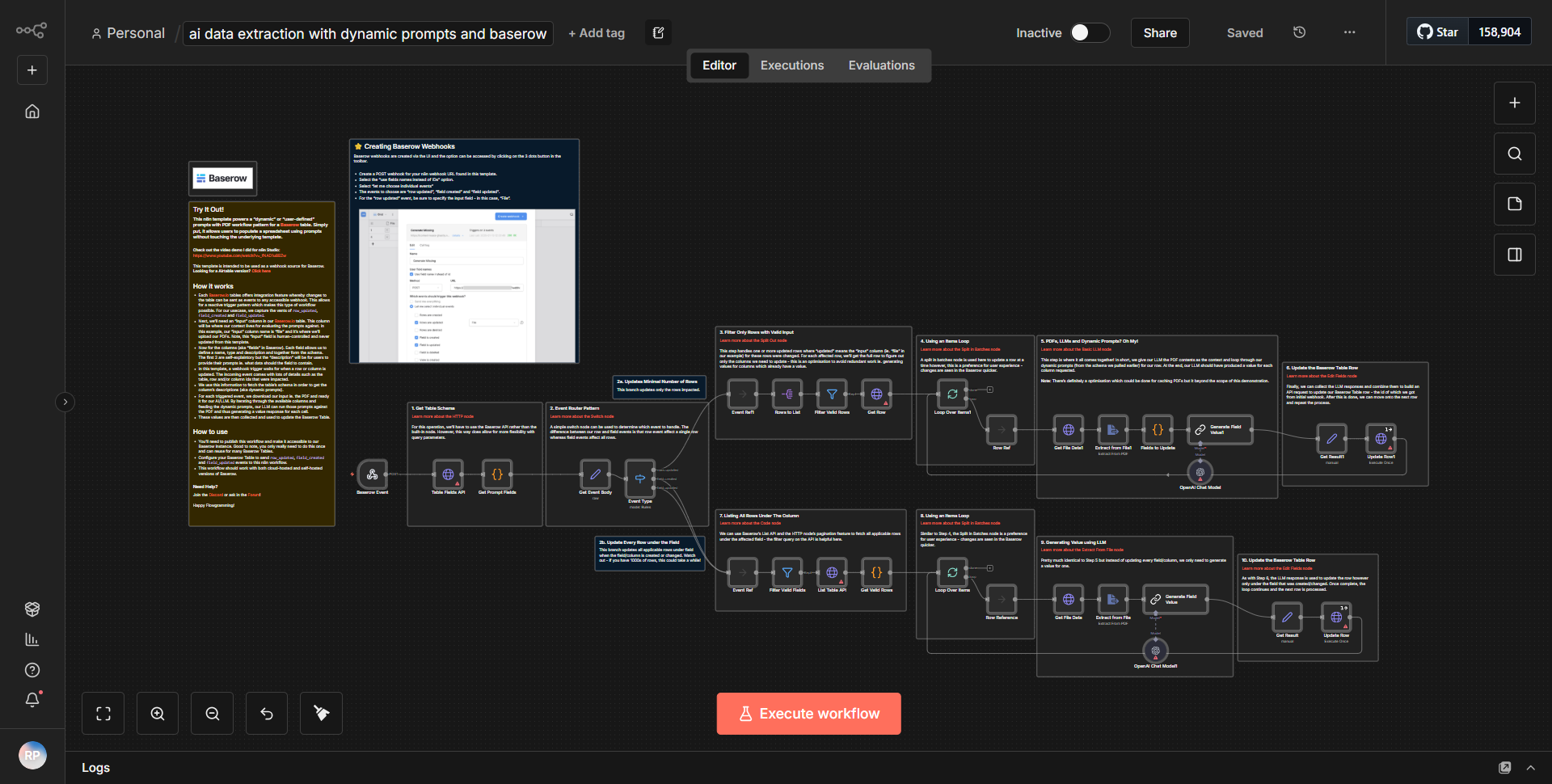

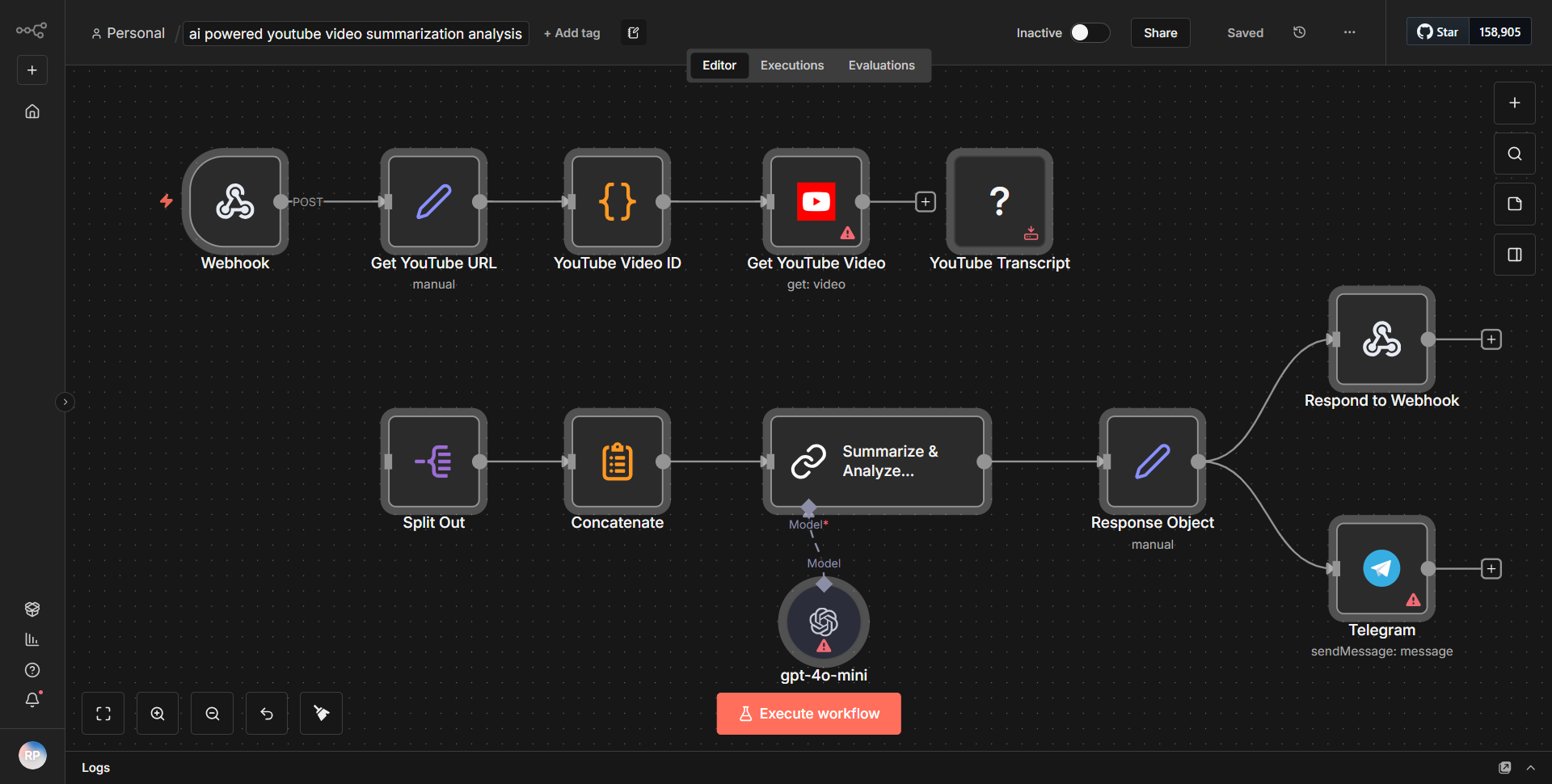

The "Web scraping AI agent" workflow automates extracting data from web pages and analyzing it with AI. It begins with an HTTP Request node configured to send a request to a specified URL. This node is the workflow's first step: it fetches the web page that needs to be scraped and passes the response on to the next node.
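The fetch step can be sketched outside n8n as plain JavaScript. This is an illustration only, assuming Node.js 18+ (global `fetch`, `Response` and `AbortSignal.timeout`); in the workflow itself the HTTP Request node handles this through its own settings. The function name `fetchPage`, its `fetchImpl` and `timeoutMs` options, and the User-Agent value are hypothetical.

```javascript
// Hypothetical stand-in for the HTTP Request node, outside n8n.
// Requires Node.js 18+ for the global fetch and AbortSignal.timeout.
// fetchImpl is injectable so the step can be exercised without network access.
async function fetchPage(url, { fetchImpl = fetch, timeoutMs = 10000 } = {}) {
  const response = await fetchImpl(url, {
    headers: {
      // Hypothetical User-Agent; some sites reject requests that lack one.
      "User-Agent": "example-scraper/0.1",
      Accept: "text/html",
    },
    // Abort slow pages instead of letting the workflow hang.
    signal: AbortSignal.timeout(timeoutMs),
  });
  if (!response.ok) {
    // Surface HTTP errors (404, 500, ...) rather than scraping an error page.
    throw new Error(`Request to ${url} failed with status ${response.status}`);
  }
  // Raw HTML, handed on to the extraction step.
  return response.text();
}
```

For example, `fetchPage("https://example.com")` resolves to the page's HTML as a string, or rejects if the server answers with an error status or the timeout expires.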

Once the HTTP Request node retrieves the page content, the workflow passes it to a Function node. This node runs custom JavaScript code against the HTML response to extract the relevant data. It is the workflow's main customization point: the code determines exactly which elements of the HTML structure are extracted and how they are shaped.
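The kind of code a Function node might run can be sketched as a plain function. This is a minimal illustration, not the workflow's actual code: `extractPageData` and the fields it returns (`title`, `links`, `text`) are hypothetical, and it uses regular expressions only, which is fragile compared with a real HTML parser.

```javascript
// Hypothetical extraction logic for the Function node step.
// Regex-based HTML handling is a simplification; a real parser is more robust.
function extractPageData(html, baseUrl) {
  // Page title, with internal whitespace collapsed.
  const titleMatch = html.match(/<title[^>]*>([\s\S]*?)<\/title>/i);
  const title = titleMatch ? titleMatch[1].replace(/\s+/g, " ").trim() : null;

  // href values from anchor tags, resolved against baseUrl and de-duplicated.
  const links = new Set();
  for (const m of html.matchAll(/<a\s[^>]*href\s*=\s*["']([^"']+)["']/gi)) {
    try {
      links.add(new URL(m[1], baseUrl).href);
    } catch {
      // Skip hrefs that cannot be resolved to a valid URL.
    }
  }

  // Visible text: drop <script>/<style> blocks, then strip the remaining tags.
  const text = html
    .replace(/<(script|style)[^>]*>[\s\S]*?<\/\1>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();

  return { title, links: [...links], text };
}
```

Inside an n8n Function node, the same logic could be applied per item with something like `return items.map(item => ({ json: extractPageData(item.json.data, "https://example.com") }));`, assuming the HTTP Request node stored the HTML in a field named `data`.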

After extraction, the data goes to an AI node, which analyzes it with a machine learning model. Depending on how it is configured, this node can summarize the content or extract insights from it, adding an interpretation step on top of plain scraping.
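The source does not say which model or provider the AI node uses, so the following is only a sketch of the input side of that step: assembling a summarization request from the scraped fields. The OpenAI-style `role`/`content` message format, the prompt wording, the character budget, and the name `buildSummaryMessages` are all assumptions.

```javascript
// Hypothetical prompt builder for the AI step. The message format
// (role/content pairs, as used by many chat-model APIs) is an assumption.
function buildSummaryMessages(page, maxChars = 8000) {
  // Trim the scraped text to a character budget so the request stays within
  // the model's context window (a rough proxy for a token limit).
  const text =
    page.text.length > maxChars
      ? page.text.slice(0, maxChars) + " [truncated]"
      : page.text;

  return [
    {
      role: "system",
      content: "You summarize web pages. Reply with 3 bullet points of key facts.",
    },
    {
      role: "user",
      content: `Title: ${page.title ?? "(none)"}\n\nPage text:\n${text}`,
    },
  ];
}
```

Truncating by characters is a deliberately crude choice; counting tokens with the provider's own tokenizer would be more precise.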

Finally, a response node formats the processed data and either sends it back to the requester or stores it in a specified location, such as a database or a file. The result is data that is not only scraped but also processed and ready for further use.
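The formatting step can be sketched as combining the scraped fields and the AI output into a single record. The field names and the function `formatResult` are hypothetical. The record is wrapped in the `{ json: ... }` item shape that n8n nodes pass between each other.

```javascript
// Hypothetical final formatting step. Field names are illustrative only.
// The result is an array of n8n-style items, each shaped { json: {...} }.
function formatResult(url, page, summary, now = new Date()) {
  return [
    {
      json: {
        url,
        title: page.title,
        linkCount: page.links.length,
        summary: summary.trim(),
        scrapedAt: now.toISOString(), // ISO 8601 timestamp in UTC
      },
    },
  ];
}
```

Passing `now` as a parameter keeps the function deterministic, which makes the output easy to check; by default it uses the current time.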

Key Features

1. Automated Web Scraping:

The workflow automates the process of scraping data from web pages, reducing manual effort and increasing efficiency.

2. Custom Data Processing:

The use of a Function node allows for tailored data extraction, enabling users to specify exactly what information they want from the HTML content.

3. AI Integration:

Incorporating an AI node adds a layer of intelligence, allowing for advanced data analysis, summarization, and insight generation from the scraped content.

4. Flexible Output Options:

The workflow can be configured to send the processed data to various destinations, such as APIs, databases, or files, making it versatile for different use cases.

5. User-Friendly Design:

The visual representation of the workflow in n8n makes it easy to understand and modify, allowing users to adapt the workflow to their specific needs.
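As one example of the flexible output options listed above, result records can be serialized to CSV for a file destination. This is an illustration, not the workflow's own exporter: `toCsv` is a hypothetical name, and the columns are simply the keys of the first record.

```javascript
// Hypothetical CSV serializer for a file output destination.
// Columns are taken from the keys of the first record.
function toCsv(records) {
  if (records.length === 0) return "";
  const columns = Object.keys(records[0]);
  // Quote every field and double embedded quotes (RFC 4180 style escaping).
  const escape = (value) => `"${String(value ?? "").replace(/"/g, '""')}"`;
  const lines = [columns.map(escape).join(",")];
  for (const record of records) {
    lines.push(columns.map((col) => escape(record[col])).join(","));
  }
  return lines.join("\r\n"); // RFC 4180 uses CRLF line breaks
}
```

Quoting every field, rather than only those containing commas or quotes, keeps the escaping rule simple and safe for arbitrary scraped text.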

Tools Integration

The workflow integrates several tools and services through specific n8n nodes:

- HTTP Request Node:

Used to send requests to web pages and retrieve HTML content.

- Function Node:

Employed for custom JavaScript code execution to manipulate and extract data from the HTML response.

- AI Node:

Utilizes machine learning capabilities to analyze and derive insights from the scraped data.

- Response Node:

Formats and sends the final processed data to the desired output location.
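How these nodes hand data to one another can be modeled with a toy runner: each node is a function that takes an array of n8n-style items (`{ json: {...} }`) and returns a new array for the next node. This is a simplified model, not how n8n executes workflows internally; `runWorkflow` and the two demo nodes, which use canned data instead of a real request, are hypothetical.

```javascript
// Toy model of the node chain: each node maps an array of items
// ({ json: {...} }) to a new array of items for the next node.
async function runWorkflow(nodes, items = [{ json: {} }]) {
  for (const [name, node] of nodes) {
    // Each node sees only the items produced by the node before it.
    items = await node(items);
    if (!Array.isArray(items)) {
      throw new TypeError(`Node "${name}" must return an array of items`);
    }
  }
  return items;
}

// Hypothetical stand-ins for two of the nodes, using canned data.
const demoNodes = [
  ["HTTP Request", async (items) =>
    items.map(() => ({ json: { data: "<title>Demo</title>" } }))],
  ["Function", async (items) =>
    items.map((item) => ({ json: { title: item.json.data.replace(/<\/?title>/g, "") } }))],
];

// prints [{"json":{"title":"Demo"}}]
runWorkflow(demoNodes).then((out) => console.log(JSON.stringify(out)));
```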

API Keys Required

No API keys or authentication credentials are required for the scraping itself: the HTTP Request and Function nodes rely only on HTTP requests and internal processing. If the AI node is configured to call an external model provider, that provider's credentials must be set up in n8n.