Identify harmful language in Telegram conversations

Observes Telegram conversations and identifies messages with harmful language through AI moderation.

How it works

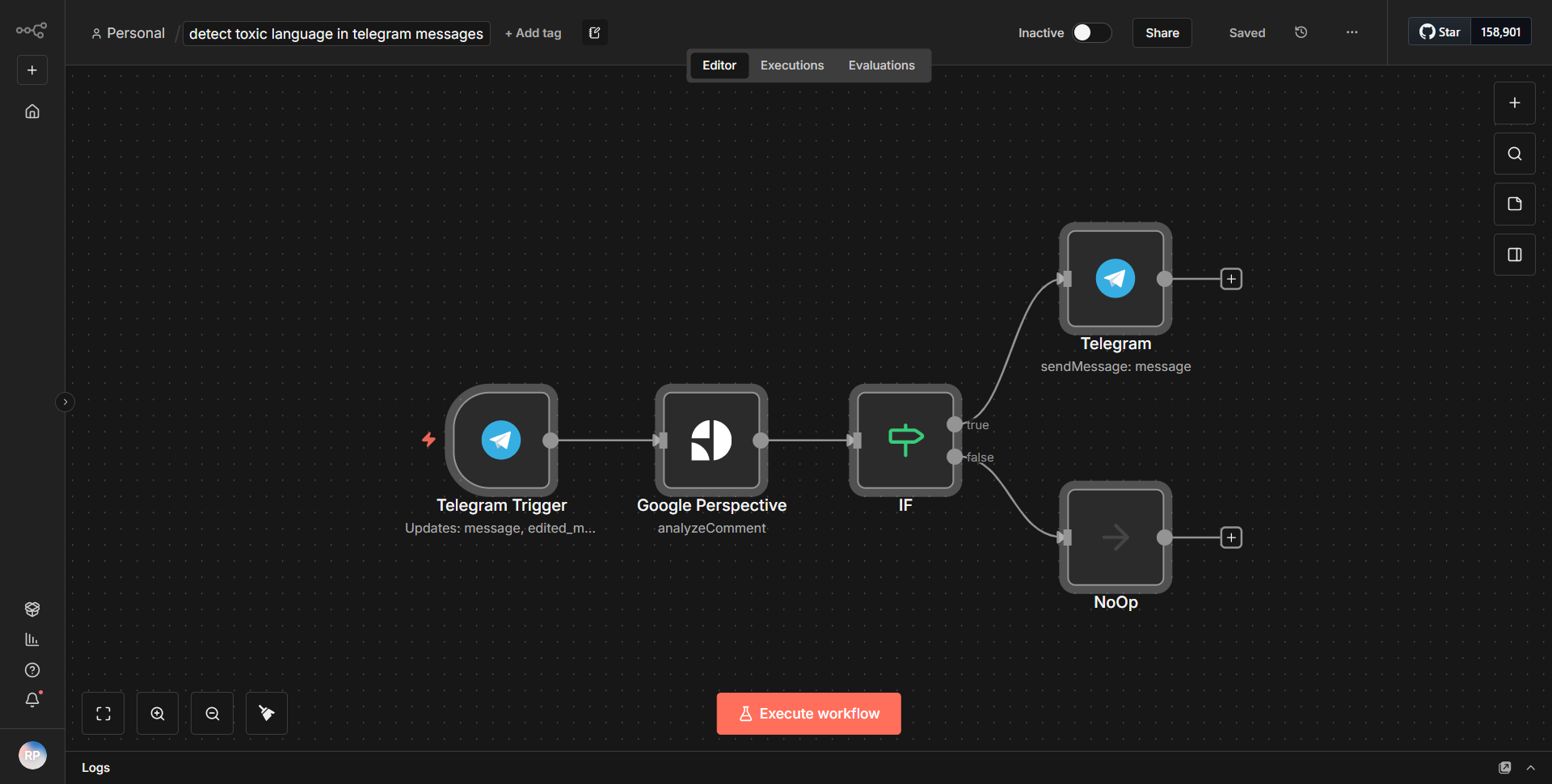

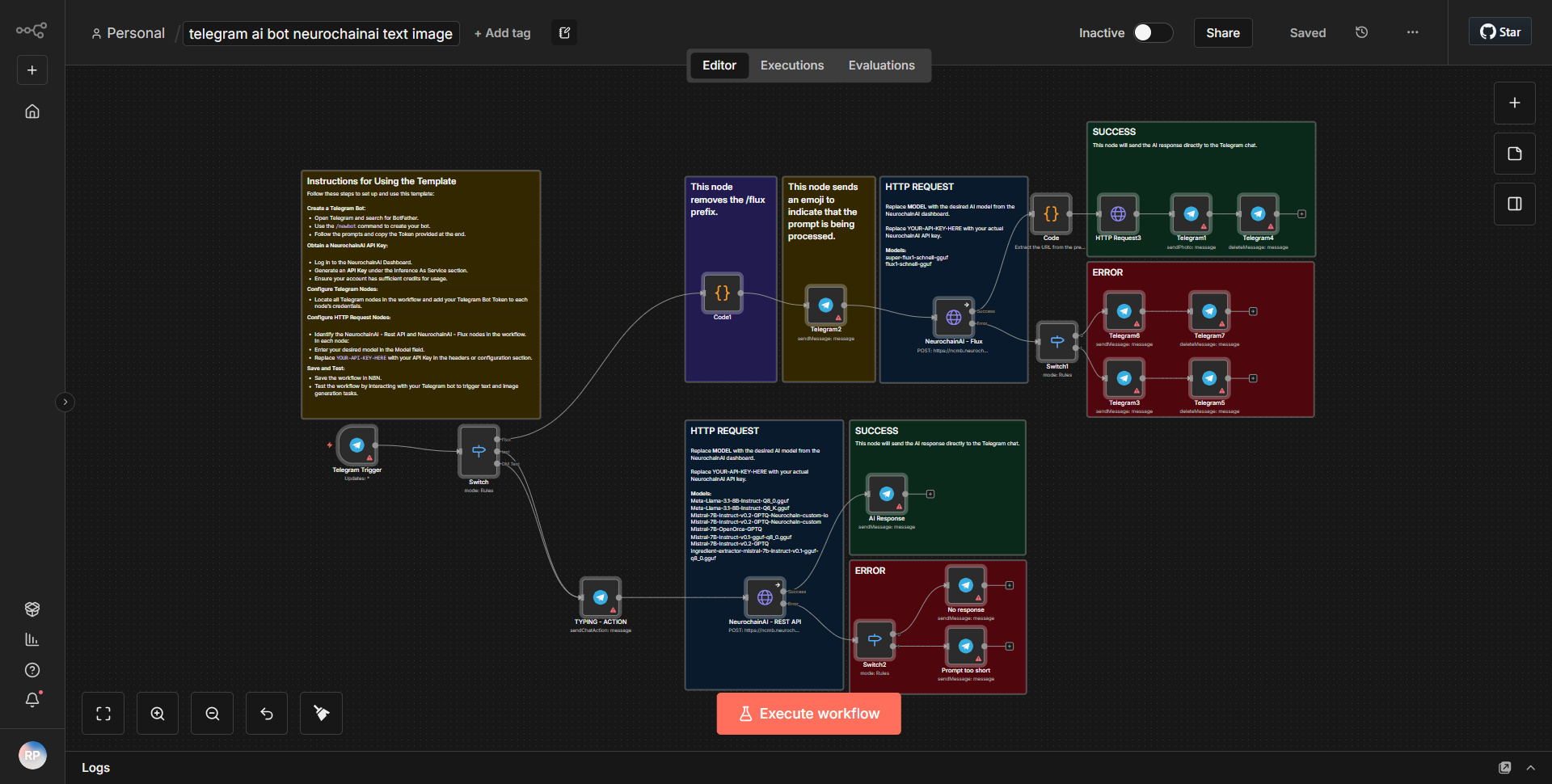

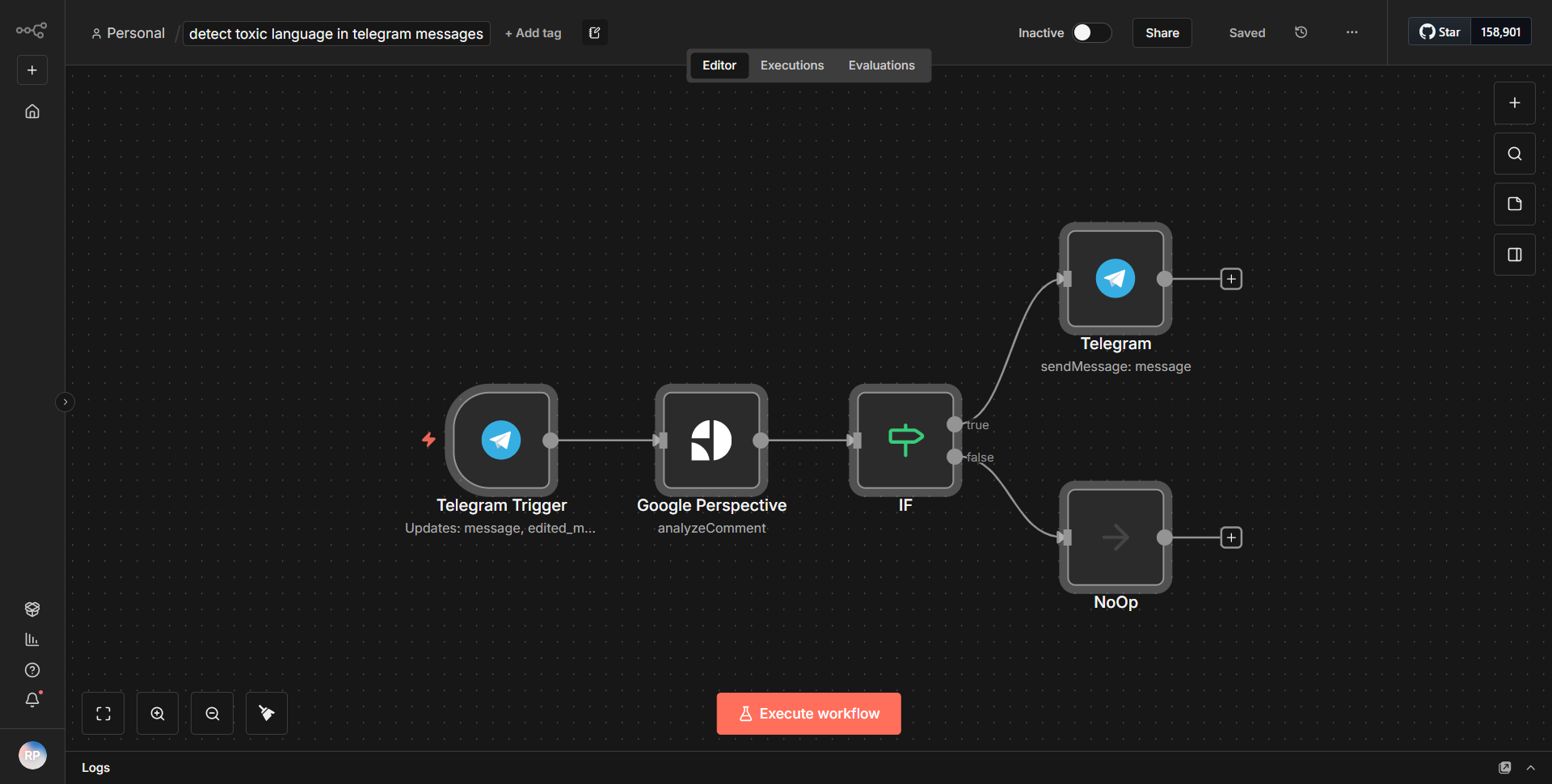

This workflow monitors incoming Telegram messages and uses an AI moderation service to detect harmful language. It is built from five connected nodes, each handling one stage of the processing:

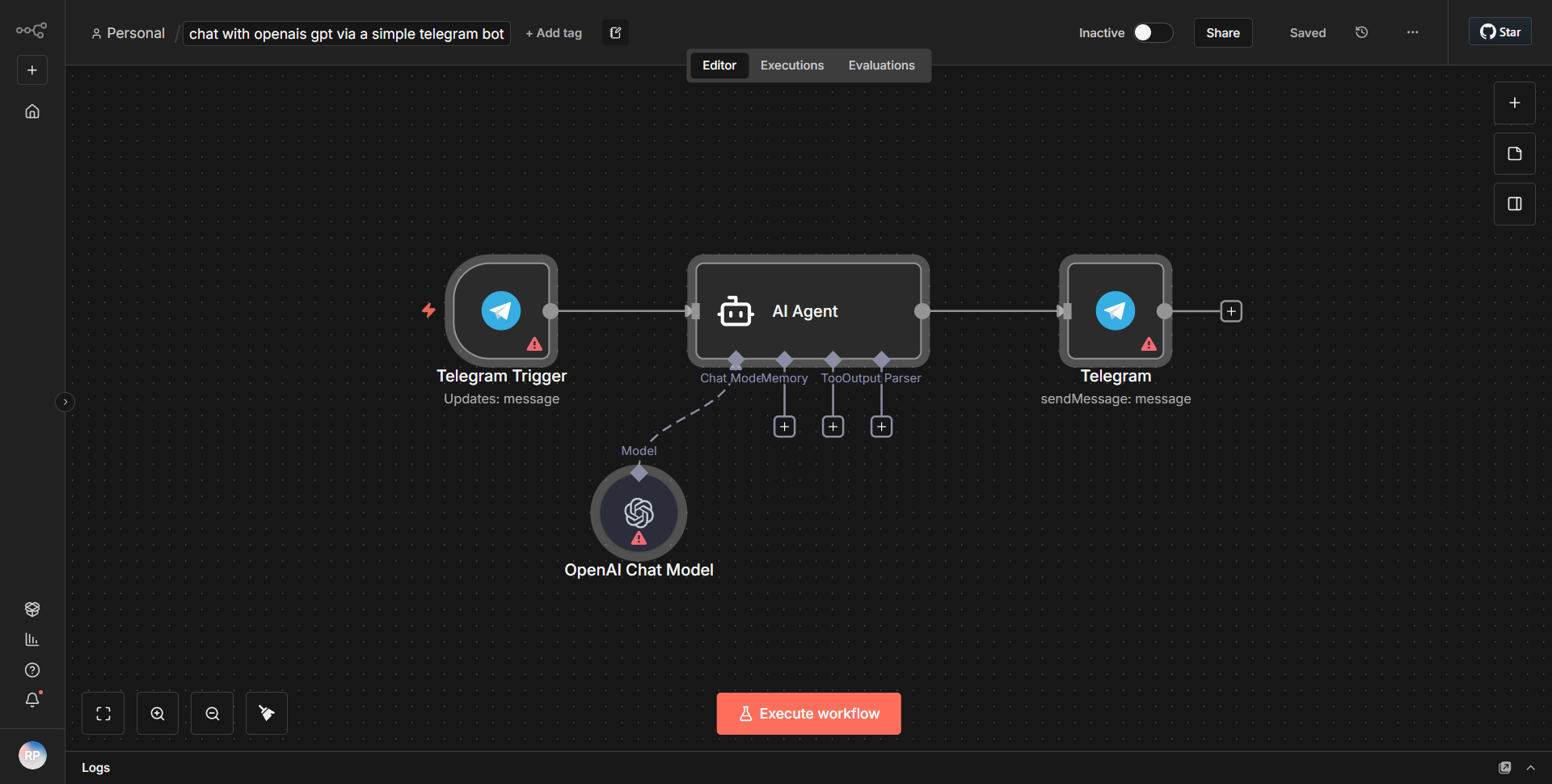

1. Telegram Trigger Node:

The workflow begins with a Telegram Trigger node that listens to a specified Telegram chat and fires each time a new message is posted.
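In Telegram Bot API terms, the trigger receives Update objects. A newly posted message arrives in the `message` field, and an edit arrives as `edited_message`. Below is a minimal Python sketch of the check that decides whether an update should start the flow. It is not the node's actual implementation. It assumes updates have already been parsed into plain dicts, and the function name `is_new_text_message` and the sample updates are hypothetical.

```python
from typing import Any


def is_new_text_message(update: dict[str, Any]) -> bool:
    """Return True only for a newly posted message that contains text.

    Edits arrive under "edited_message" and are ignored, as are new
    messages without a "text" field (stickers, photos, and so on).
    """
    message = update.get("message")
    return isinstance(message, dict) and isinstance(message.get("text"), str)


# Hypothetical sample updates shaped like Bot API Update objects.
new_msg = {"update_id": 1, "message": {"message_id": 10, "text": "hello"}}
edited = {"update_id": 2, "edited_message": {"message_id": 10, "text": "hi"}}
sticker = {"update_id": 3, "message": {"message_id": 11, "sticker": {}}}

print(is_new_text_message(new_msg))  # True
print(is_new_text_message(edited))   # False
print(is_new_text_message(sticker))  # False
```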

2. Function Node:

The trigger passes the incoming message to a Function node. This node prepares the data for the next step by extracting the relevant fields, such as the message text and the user who sent it.
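Here is a standalone Python sketch of the extraction step. It assumes a Bot API `Message` object has been parsed into a dict. `extract_message` and the sample values are hypothetical, and the real Function node may extract different fields.

```python
from typing import Any


def extract_message(update: dict[str, Any]) -> dict[str, Any]:
    """Keep only the fields the moderation and alert steps need."""
    message = update["message"]
    sender = message.get("from", {})
    # Prefer the username; fall back to the first name if none is set.
    user = sender.get("username") or sender.get("first_name", "unknown")
    return {
        "chat_id": message["chat"]["id"],
        "message_id": message["message_id"],
        "user": user,
        "text": message.get("text", ""),
    }


# Hypothetical update for a group message.
update = {
    "update_id": 1,
    "message": {
        "message_id": 42,
        "from": {"id": 7, "first_name": "Ana", "username": "ana_k"},
        "chat": {"id": -100123, "type": "supergroup"},
        "text": "example message",
    },
}
print(extract_message(update))
# {'chat_id': -100123, 'message_id': 42, 'user': 'ana_k', 'text': 'example message'}
```

Keeping `chat_id` lets a later alert go back to the right chat. The first-name fallback covers senders who have not set a username.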

3. HTTP Request Node:

An HTTP Request node then sends the extracted text in a POST request to an external AI moderation service. The service analyzes the text and returns its assessment of whether the content is harmful.
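The request this node sends can be sketched with Python's standard library. The endpoint URL, the `{"input": ...}` body, and Bearer authentication are all assumptions for illustration. The actual field names and auth scheme depend on the moderation service you connect.

```python
import json
import urllib.request

# Hypothetical endpoint; substitute the moderation service you actually use.
MODERATION_URL = "https://moderation.example.com/v1/moderate"


def build_moderation_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) the POST request carrying the message text."""
    body = json.dumps({"input": text}).encode("utf-8")
    return urllib.request.Request(
        MODERATION_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            # Bearer auth is an assumption; check your provider's docs.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_moderation_request("example message", "test-key")
print(req.get_method())      # POST
print(json.loads(req.data))  # {'input': 'example message'}
# Sending it would be: urllib.request.urlopen(req, timeout=10)
```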

4. IF Node:

When the moderation service responds, an IF node evaluates the result. If the response indicates harmful language, the workflow continues to the next step; otherwise, processing of that message ends.
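The IF node's condition can be sketched as a pair of functions. This assumes the service returns a body shaped like OpenAI's moderation endpoint, `{"results": [{"flagged": ..., "categories": {...}}]}`. Other services use different fields, so adjust the lookups to match. The function names are hypothetical.

```python
from typing import Any


def is_harmful(response: dict[str, Any]) -> bool:
    """True if any result in the moderation response is flagged."""
    results = response.get("results") or []
    return any(bool(r.get("flagged")) for r in results)


def flagged_categories(response: dict[str, Any]) -> list[str]:
    """Sorted names of every category marked True across all results."""
    names = set()
    for r in response.get("results") or []:
        names.update(name for name, hit in r.get("categories", {}).items() if hit)
    return sorted(names)


flagged = {"results": [{"flagged": True,
                        "categories": {"harassment": True, "violence": False}}]}
clean = {"results": [{"flagged": False, "categories": {"harassment": False}}]}

print(is_harmful(flagged), flagged_categories(flagged))  # True ['harassment']
print(is_harmful(clean), flagged_categories(clean))      # False []
```

Treating a missing `results` list as not harmful means the check fails open. Raising an error instead is a reasonable alternative if silently skipping a message is worse than a failed execution.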

5. Telegram Send Message Node:

If harmful language is identified, a Telegram Send Message node posts a notification back to the chat to alert its members. The notification can include details about the offending message and the user who sent it.
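Outside the workflow tool, the notification corresponds to a call to the Bot API's `sendMessage` method, which takes `chat_id` and `text`. The sketch below builds that request without sending it. `build_alert_request` and the alert wording are hypothetical.

```python
import json
import urllib.request


def build_alert_request(bot_token: str, chat_id: int, user: str,
                        categories: list[str]) -> urllib.request.Request:
    """Build (but do not send) a Bot API sendMessage request for an alert."""
    reason = ", ".join(categories) or "unspecified category"
    text = (f"Warning: a message from {user} was flagged for "
            f"harmful language ({reason}).")
    payload = {"chat_id": chat_id, "text": text}
    return urllib.request.Request(
        f"https://api.telegram.org/bot{bot_token}/sendMessage",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder token; never commit a real one.
req = build_alert_request("123456:ABC-DEF", -100123, "ana_k", ["harassment"])
print(json.loads(req.data)["text"])
# Warning: a message from ana_k was flagged for harmful language (harassment).
# To send it: urllib.request.urlopen(req, timeout=10)
```

This version names the sender and the flagged categories but deliberately does not quote the offending text. That way the alert does not repost the harmful content to the chat.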

With this flow, every new message is checked for harmful content, and an alert is posted only when the moderation service flags it.

Key Features

- Real-time Monitoring:

The workflow checks each message as soon as the trigger receives it, so harmful language is detected shortly after it is posted.

- AI Moderation:

Uses an external AI service to analyze message content. This can catch harmful language that simple keyword filtering would miss.

- User Notifications:

Automatically alerts users in the Telegram chat when harmful language is detected, promoting a safer communication environment.

- Customizable Triggers:

The workflow can be tailored to monitor specific Telegram chats or groups, allowing for flexible deployment in various contexts.

- Scalability:

Each incoming message is processed independently, so the workflow can keep up with active group chats or channels.
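Restricting the workflow to specific chats amounts to an allowlist check on the chat ID. The IDs below are placeholders (group and supergroup IDs are negative in the Bot API), and `is_monitored_chat` is a hypothetical helper.

```python
from typing import Any

# Placeholder chat IDs; group and supergroup IDs are negative in the Bot API.
ALLOWED_CHAT_IDS = {-1001234567890, -1009876543210}


def is_monitored_chat(update: dict[str, Any], allowed: set[int]) -> bool:
    """True if the update's message comes from one of the allowed chats."""
    message = update.get("message") or {}
    return message.get("chat", {}).get("id") in allowed


in_scope = {"message": {"chat": {"id": -1001234567890}, "text": "hi"}}
out_of_scope = {"message": {"chat": {"id": 555}, "text": "hi"}}

print(is_monitored_chat(in_scope, ALLOWED_CHAT_IDS))      # True
print(is_monitored_chat(out_of_scope, ALLOWED_CHAT_IDS))  # False
```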

Tools Integration

The workflow integrates several tools and services to function effectively:

- Telegram:

Uses the Telegram Bot API both to receive messages (Telegram Trigger node) and to send notifications (Telegram Send Message node).

- HTTP Request:

This node connects to an external AI moderation service, enabling the analysis of message content for harmful language.

- Function Node:

Processes and prepares data for the HTTP request, ensuring that the message content is formatted correctly for analysis.

- IF Node:

Implements conditional logic to determine the next steps based on the AI service's response.

API Keys Required

The workflow requires the following API keys and credentials:

- Telegram Bot Token:

Necessary for the Telegram Trigger and Send Message nodes to authenticate and interact with the Telegram API.

- AI Moderation Service API Key:

Required for the HTTP Request node to authenticate requests to the external AI moderation service.

No additional API keys or credentials are needed beyond those specified above.
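If you reimplement this flow as a standalone script, a fail-fast loader stops missing keys from surfacing as confusing errors partway through a run. The environment variable names `TELEGRAM_BOT_TOKEN` and `MODERATION_API_KEY` are assumptions, not names the workflow defines. `load_credentials` is a hypothetical helper.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical environment variable names.
REQUIRED_VARS = ("TELEGRAM_BOT_TOKEN", "MODERATION_API_KEY")


@dataclass(frozen=True)
class Credentials:
    # repr=False keeps secrets out of logs if the object is printed.
    telegram_bot_token: str = field(repr=False)
    moderation_api_key: str = field(repr=False)


def load_credentials(env: Optional[Mapping[str, str]] = None) -> Credentials:
    """Read both credentials, failing fast if either is missing or empty."""
    source = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not source.get(name)]
    if missing:
        raise RuntimeError("Missing credentials: " + ", ".join(missing))
    return Credentials(source["TELEGRAM_BOT_TOKEN"], source["MODERATION_API_KEY"])


creds = load_credentials({"TELEGRAM_BOT_TOKEN": "123456:ABC-DEF",
                          "MODERATION_API_KEY": "test-key"})
print(creds)  # Credentials()
```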